About

I am a third-year PhD candidate in the Sheffield NLP Group, focusing on cross-lingual transfer and efficient language modelling. I have 7+ years of combined academic and industry experience in NLP and hold an MSc in Computer Science with Speech and Language Processing (Distinction) from the University of Sheffield (2020). From 2021 to 2023, I was a researcher in the R&D Group at Hitachi, Ltd. (Japan), working on information extraction and efficient language model development. My research has been published at top-tier NLP/ML conferences and journals, including ACL, EMNLP, TMLR, and Computational Linguistics. Alongside my research, I contribute to the community as a member of the ACL Rolling Review (ARR) support team.

News

-

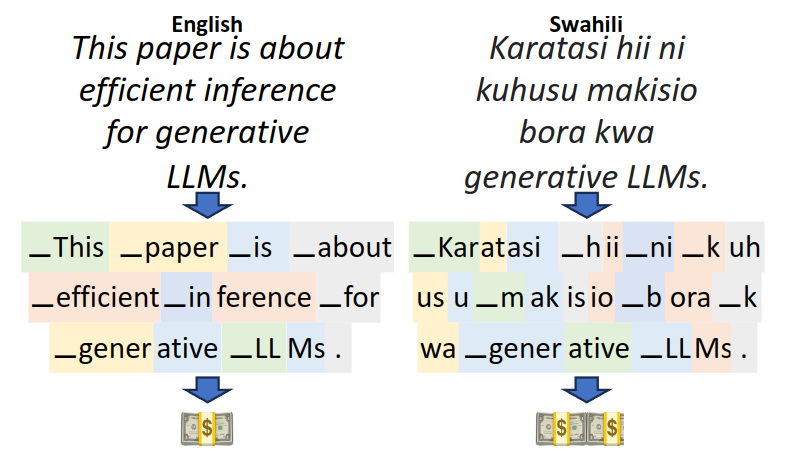

Our paper on extremely low-resource vocabulary expansion has been accepted to Computational Linguistics! See here for the preprint.

-

Our paper on vocabulary expansion of chat models has been accepted to Transactions on Machine Learning Research (TMLR)! See here.

-

I will be co-chairing the EACL 2026 Student Research Workshop. Call for papers will be out soon!

-

Updated CV. See here.

Research Interests

Cross-lingual Transfer, Language Modelling, Natural Language Understanding, Natural Language Processing, Machine Learning

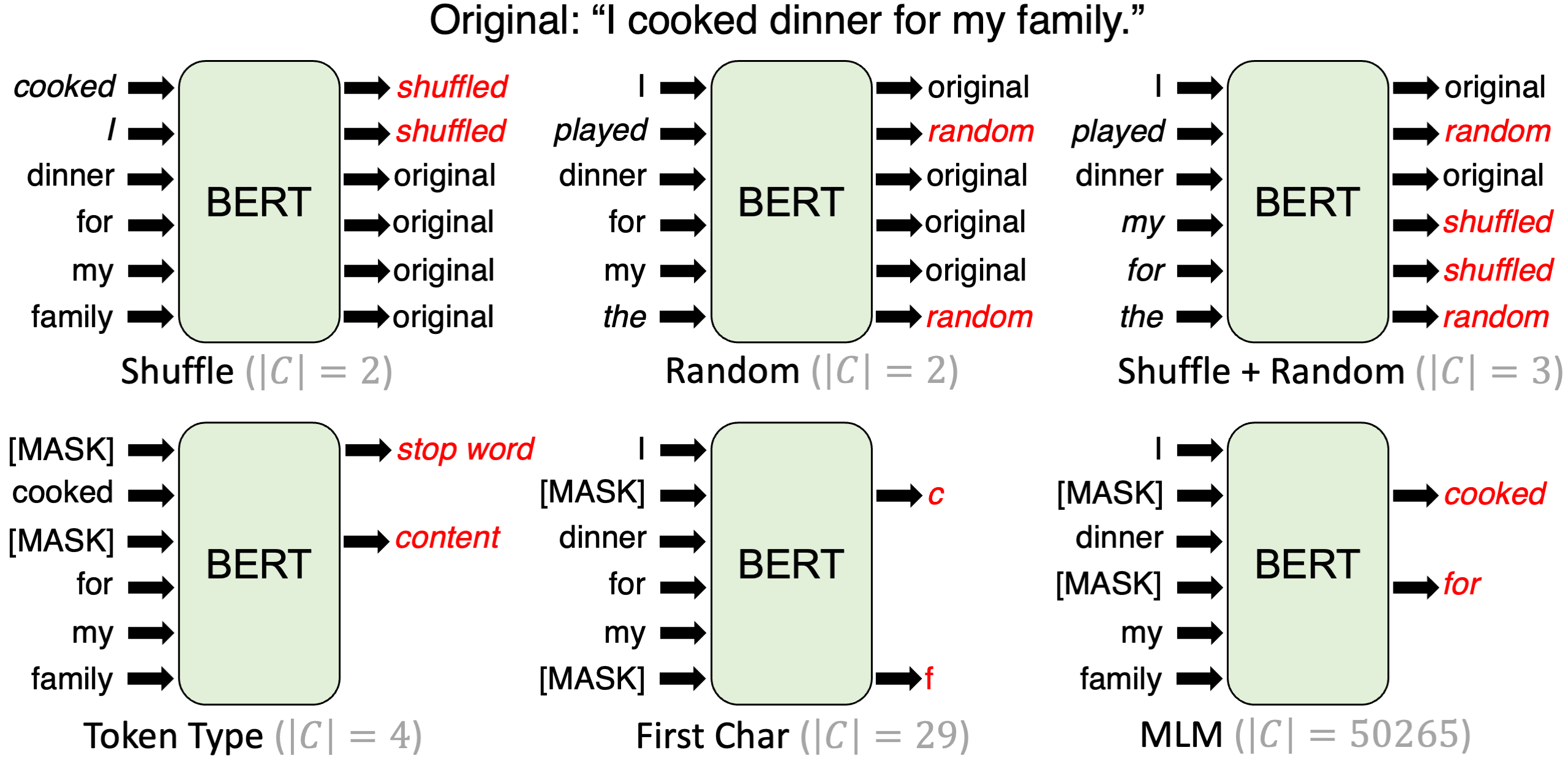

Recent Work

Links

Contact

You can send me messages via this contact form.